AI Interview Tools: Legal and Ethical Risks

AI interview tools speed up hiring but can introduce bias, privacy risks, and legal exposure. Employers and job seekers must adopt transparency and human oversight.

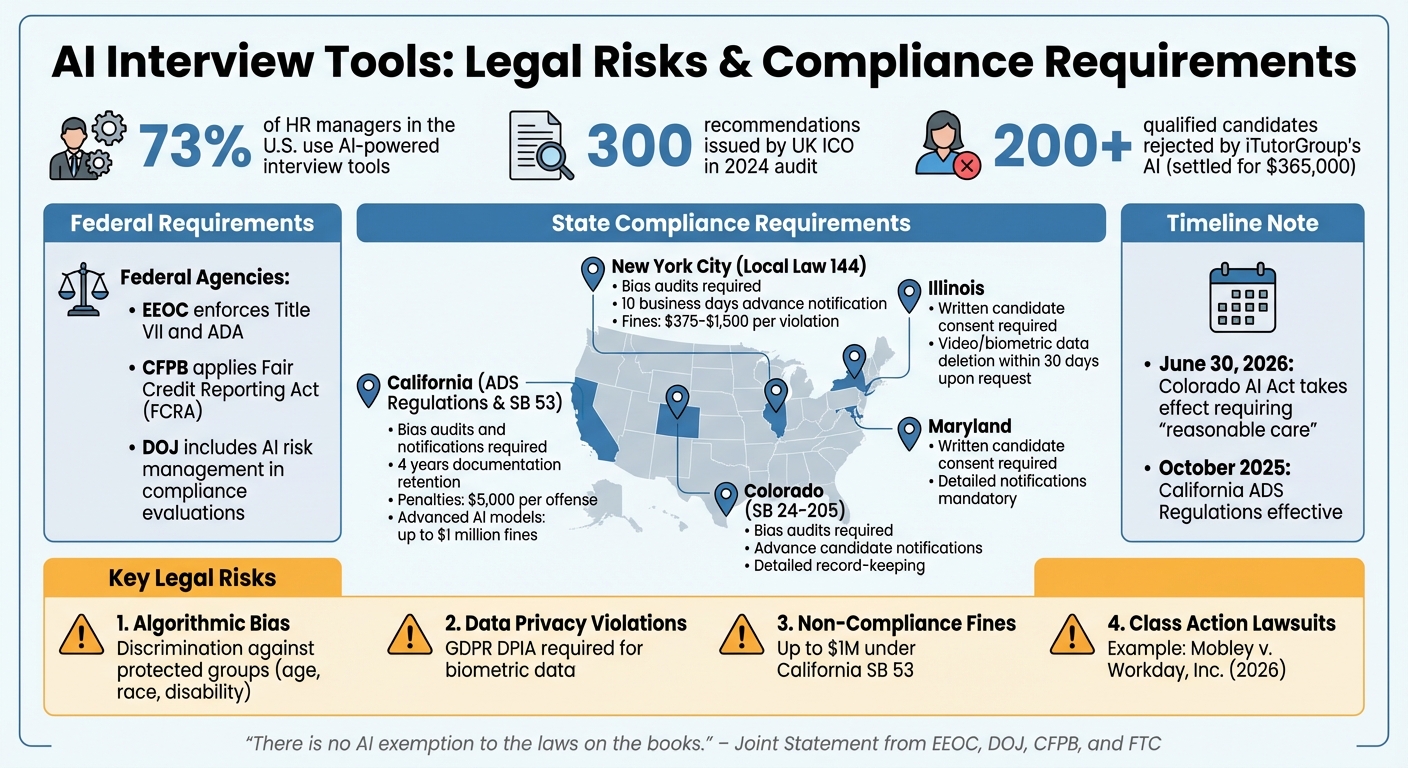

AI-powered interview tools are reshaping hiring, with 73% of HR managers in the U.S. using them to streamline processes. These tools can analyze resumes, video interviews, and even facial expressions, offering speed and cost savings. However, they bring legal and ethical risks, including bias, data privacy concerns, and regulatory challenges. Recent lawsuits, like Mobley v. Workday, Inc., highlight how algorithms can discriminate against protected groups, such as older or disabled applicants. Jurisdictions such as California, New York City, and Colorado now require measures like bias audits and candidate notifications. Employers must balance automation with human oversight to avoid penalties and build trust.

For job seekers, AI tools can misinterpret non-traditional resumes or exclude candidates unfairly. Services like scale.jobs address these issues by using human oversight to optimize applications for both AI systems and recruiters. This ensures compliance with laws and reduces the risks of algorithmic errors.

Key takeaways:

- Legal risks: Algorithmic bias, data privacy violations, and non-compliance fines (up to $1M under California’s SB 53).

- Ethical concerns: Lack of transparency, automation bias, and accessibility barriers.

- Solutions for job seekers: Platforms like scale.jobs offer ATS-optimized resumes and manual review to navigate AI hiring tools effectively.

Employers and job seekers alike must prioritize compliance, transparency, and fairness in this evolving hiring landscape.

Legal Risks of AI Interview Tools

Regulatory Compliance Requirements

Employers using AI interview tools must carefully navigate federal and state laws to avoid legal pitfalls. On the federal level, the Equal Employment Opportunity Commission (EEOC) enforces Title VII and the Americans with Disabilities Act (ADA), which prohibit hiring practices, including AI-driven ones, that unfairly disadvantage protected groups or exclude individuals with disabilities. Additionally, the Fair Credit Reporting Act (FCRA), as interpreted by the Consumer Financial Protection Bureau (CFPB), applies when AI tools monitor or evaluate employees.

State and local laws add another layer of complexity. For example, New York City's Local Law 144, California's ADS Regulations, and Colorado's SB 24-205 all require bias audits, advance candidate notifications, and detailed record-keeping. These requirements vary, with some jurisdictions mandating up to 4 years of documentation retention and others requiring notifications at least 10 business days before using AI tools.

Federal agencies are also stepping up enforcement. The Department of Justice now expects companies to manage AI risks as part of their corporate compliance programs, which can influence penalties in investigations. Violations can result in hefty fines: California's AI transparency rules impose penalties of $5,000 per offense, while developers of advanced AI models under SB 53 could face fines up to $1 million for non-compliance with safety protocols.

Beyond regulatory concerns, AI tools may unintentionally perpetuate discrimination due to underlying biases in their design.

Discrimination and Algorithmic Bias

AI tools can unintentionally reinforce discrimination, often due to biases in their training data or technical limitations in recognizing diverse traits like facial features or voice patterns. Some tools even rely on "proxy indicators" - data points that indirectly correlate with protected characteristics such as race, gender, or disability - to evaluate candidates, which can lead to biased outcomes.

A notable case highlighting these risks involves Workday, Inc. In January 2026, Judge Rita Lin of the U.S. District Court for the Northern District of California expanded a lawsuit against the company into a nationwide class action. Plaintiff Derek Mobley claimed that Workday's AI-powered screening tools disproportionately rejected older, Black, and disabled applicants. The court certified a class of applicants over 40 under the Age Discrimination in Employment Act (ADEA), signaling that courts are increasingly willing to hold AI vendors accountable as "agents" of employers.

"Such technology may inappropriately screen out individuals with speech impediments... the same concerns would apply to individuals with visible disabilities, or disabilities that affect their movements." - Charlotte Burrows, Chair, U.S. Equal Employment Opportunity Commission

In 2024, the UK Information Commissioner's Office audited AI recruitment tools and issued nearly 300 recommendations to address fairness and privacy concerns. Some systems were found to infer sensitive traits, such as gender or ethnicity, from a candidate's name rather than collecting such data directly. For job seekers worried about how AI evaluates their applications, working with a job search virtual assistant or resume writing services can help tailor their materials for automated screening and reduce the chance of being filtered out in error.

While legal frameworks address compliance, they also emphasize the importance of candidate consent and responsible data handling.

Data Privacy and Candidate Consent

Employers must meet stringent requirements when collecting, storing, and using candidate data. Under the General Data Protection Regulation (GDPR), companies must conduct a Data Protection Impact Assessment (DPIA) if AI processes sensitive or high-risk data, such as biometric analysis. In the U.S., jurisdictions such as Illinois, Maryland, and New York City mandate written candidate consent and detailed notifications before using AI tools. These notifications must clearly outline the job qualifications being assessed.

Data minimization is another critical aspect. AI tools often collect excessive personal information, for example by scraping social media profiles, that may not be relevant to the hiring process. Employers are also required to implement strict data deletion policies. For instance, Illinois law demands that video and biometric data be deleted within 30 days of a candidate's request. These measures not only support compliance but also highlight the ethical challenges of automated hiring systems.
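As a rough illustration of the 30-day deletion window described above, the sketch below tracks deletion requests and lists the ones that are overdue. The function names and the request log format are hypothetical, and the sketch assumes calendar days counted from the request date; how a given statute actually counts the window should be confirmed with counsel.

```python
from datetime import date, timedelta

# 30-day window taken from the text; counting calendar days is an assumption.
DELETION_WINDOW_DAYS = 30


def deletion_deadline(request_date: date) -> date:
    """Last day by which the requested data should be deleted."""
    return request_date + timedelta(days=DELETION_WINDOW_DAYS)


def overdue_requests(requests: dict, deleted: set, today: date) -> list:
    """Return candidate IDs whose data is still held past the deadline.

    requests maps a candidate ID to the date the deletion request arrived;
    deleted is the set of candidate IDs whose data has already been removed.
    """
    return sorted(
        candidate_id
        for candidate_id, requested_on in requests.items()
        if candidate_id not in deleted and today > deletion_deadline(requested_on)
    )


# Hypothetical request log for illustration only.
requests = {
    "cand-001": date(2025, 1, 2),
    "cand-002": date(2025, 1, 20),
    "cand-003": date(2025, 1, 2),
}
deleted = {"cand-003"}
overdue = overdue_requests(requests, deleted, today=date(2025, 2, 5))
```

A check like this could run daily and alert whoever owns the retention policy, so that requests are handled before the window closes rather than discovered after it.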

Ethical Problems in AI Hiring

AI hiring tools may promise efficiency, but they also bring ethical challenges that can erode trust and fairness in the hiring process.

Transparency and Disclosure Obligations

One of the biggest ethical concerns is the lack of transparency in how AI hiring tools evaluate candidates. Employers often fail to disclose what traits or behaviors are being assessed, leaving candidates in the dark about how their applications are being judged. Many applicants are unaware of whether their word choice, facial expressions, tone of voice, or other factors are influencing their scores. This lack of clarity creates a power imbalance, making it difficult for candidates to prepare or understand why they may have been rejected.

For instance, New York City's AEDT law requires employers to notify candidates at least 10 business days before using AI tools. However, the law doesn’t require companies to explain the exact criteria being used. Without this information, candidates can’t make informed choices about proceeding with the interview or requesting accommodations.
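To make the 10-business-day notice window concrete, here is a minimal sketch that computes the earliest date an AI tool could be used after a notice goes out. The helper name is hypothetical, and the sketch assumes that weekends and any supplied holidays do not count as business days; the law's own counting rules take precedence.

```python
from datetime import date, timedelta


def add_business_days(start: date, days: int, holidays=frozenset()) -> date:
    """Return the date that falls `days` business days after `start`.

    Assumes Monday to Friday are business days unless listed in `holidays`.
    """
    current = start
    remaining = days
    while remaining > 0:
        current += timedelta(days=1)
        if current.weekday() < 5 and current not in holidays:
            remaining -= 1
    return current


# Hypothetical example: notice sent on Monday, March 3, 2025.
notice_sent = date(2025, 3, 3)
earliest_use = add_business_days(notice_sent, 10)

# Same notice date, with one made-up company holiday inside the window.
earliest_use_with_holiday = add_business_days(
    notice_sent, 10, holidays=frozenset({date(2025, 3, 10)})
)
```

Scheduling software could refuse to book an AI-scored interview before the computed date, which turns the notice requirement into a hard check instead of a manual reminder.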

"Utilizing tools that can be explained to the candidates being evaluated, and being transparent about the ways in which the tools will be used, will not only assist employers in complying with applicable laws and regulations, but also increase employer credibility with candidates." - Nathaniel M. Glasser, Member of the Firm, Epstein Becker & Green, P.C.

Audits of AI hiring systems have shown that many tools infer sensitive characteristics like gender or ethnicity based on a candidate's name instead of collecting accurate data. This kind of opacity can perpetuate harmful biases and assumptions, further undermining fairness.

Automation vs. Human Review

Over-reliance on AI in hiring decisions can lead to automation bias, where recruiters blindly trust algorithmic outputs without verifying their accuracy. This can result in unfair or discriminatory outcomes, as algorithms often fail to account for context or nuance.

A striking example occurred in August 2023, when iTutorGroup settled an EEOC case for $365,000 after its AI software automatically rejected female applicants aged 55 or older and male applicants aged 60 or older. This algorithmic bias affected over 200 qualified candidates, who were screened out without any human review. Similarly, Amazon faced backlash in 2018 for an internal AI recruiting tool that penalized resumes that contained the word "women's" or listed all-women's colleges. The tool was trained on a decade of resumes, most of which came from men, so the algorithm replicated the historical bias in that data.

To prevent such issues, employers must prioritize human oversight in hiring decisions. AI should never act as the sole decision-maker. For example, candidates with disabilities or those requesting alternative assessments should have access to manual reviews. For job seekers concerned about these biases, using a job application service can help ensure their resumes are optimized for both AI screening tools and human review. These cases highlight the importance of maintaining a balance between automation and human judgment.

Candidate Experience: Trust and Access Barriers

AI-driven hiring systems often leave candidates feeling uneasy, especially when the process lacks transparency. Many applicants are uncomfortable not knowing how their responses are scored or what data is being collected. This uncertainty can damage employer credibility and discourage talented candidates from completing the application process.

Accessibility is another pressing issue. AI tools for video interviews may inadvertently exclude candidates with speech impediments, visible disabilities, or non-native accents. These tools often struggle with individuals whose communication styles differ from the training data, leading to inaccurate assessments. Moreover, many systems fail to provide clear instructions for requesting accommodations, which can violate the Americans with Disabilities Act (ADA).

"AI can bring real benefits to the hiring process, but it also introduces new risks that may cause harm to jobseekers if it is not used lawfully and fairly." - Ian Hulme, Director of Assurance, Information Commissioner's Office (ICO)

Privacy concerns add another layer of complexity. Some AI tools scrape personal data and photos from social media without candidates’ consent or retain interview videos and biometric data indefinitely. While Illinois law requires employers to destroy interview videos within 30 days upon request, many companies fail to comply or neglect to inform candidates of this right.

Employers can rebuild trust by offering clear, plain-language explanations of how AI tools work and providing alternative assessment methods for those who opt out. Limiting data collection and clearly communicating retention policies are also critical steps. These measures not only ensure fairness but also help employers strike the right balance between automation and human oversight in hiring.

How scale.jobs Reduces AI Hiring Risks

Navigating the pitfalls of AI-driven hiring tools can be daunting for job seekers. While some platforms like LazyApply and Simplify.jobs rely heavily on automation, scale.jobs takes a different route. By prioritizing human oversight over algorithms, scale.jobs ensures your application isn’t derailed by the biases or errors often associated with AI systems.

Human Assistants Handle Every Application

At scale.jobs, trained virtual assistants manually manage every application. This hands-on approach avoids the common pitfalls of automated systems, such as triggering bot-detection filters or misinterpreting nuanced qualifications. Instead of letting algorithms decide, human assistants ensure your application is tailored and effectively presented to recruiters.

Here’s what sets scale.jobs apart from automated platforms:

- Tailored customization to accurately represent non-traditional career paths or unique qualifications.

- Human oversight to prevent discrimination based on age, race, disabilities, or other factors.

- Guaranteed delivery of applications without being flagged or rejected by automated systems.

- Nuanced presentation of skills and experiences that AI tools might overlook.

- Legal compliance with human-review requirements under federal and state hiring laws.

This approach directly addresses concerns about biased AI filtering, which has led to lawsuits in the past. By focusing on fairness and transparency, scale.jobs not only reduces regulatory risks but also builds trust with job seekers.

Real-Time Updates and Proof of Work

One of the biggest frustrations with automated hiring tools is the lack of transparency. Candidates are often left wondering why they were rejected or how their data was used. To address this, scale.jobs provides real-time updates via WhatsApp, along with time-stamped screenshots and a detailed tracking dashboard. This ensures you have complete visibility into your application process.

This level of documentation also aligns with legal requirements under laws like New York City’s Local Law 144 and the Colorado AI Act. With scale.jobs, you’ll know exactly when and where your application was submitted, leaving no room for uncertainty.

ATS-Optimized Resumes and Cover Letters

Applicant tracking systems (ATS) are notorious for rejecting resumes before they even reach a recruiter. By one frequently cited estimate, 75% of resumes are filtered out due to formatting issues or missing keywords. scale.jobs tackles this problem by creating resumes and cover letters that are ATS-compliant while still appealing to human reviewers. The goal is for your documents to pass through automated filters and still stand out to recruiters.

Unlike some AI recruiting tools, which have been criticized for embedding bias - such as a 2018 system that penalized resumes mentioning "women's" - scale.jobs combines ATS optimization with human review. This thoughtful balance ensures your application is both effective and fair, giving you the best chance to succeed.

Best Practices for Employers Using AI Tools

Employers using AI-driven interview systems are under increasing legal scrutiny from both state and federal regulators. To navigate these challenges and reduce legal and ethical risks, adopting strategic practices is essential. For instance, New York City's AEDT Law imposes fines ranging from $375 to $1,500 per violation for failing to conduct yearly bias audits. Similarly, California's ADS Regulations mandate keeping all AI-related documentation for at least four years. Additionally, the Department of Justice now includes AI risk management in corporate compliance evaluations, making proactive steps crucial for compliance.

Run Regular Bias Audits

Bias audits shouldn't be a one-time task. Regularly test AI tools to identify any disparate impact by analyzing selection rates across gender, race, and other intersectional categories. Use the EEOC's "four-fifths" rule: if the selection rate for a protected group is less than 80% of the highest group's rate, the tool could be non-compliant with Title VII. Before purchasing AI tools, examine vendor-provided selection rates, assessment reports, and Model Cards that explain the training data and any known limitations. If audits uncover bias, collaborate with vendors to adjust algorithms, include more training data for underrepresented groups, or consider alternative tools.
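The four-fifths check described above can be sketched in a few lines. This is an illustrative calculation only, not a complete bias audit: the function names, group labels, and sample counts are hypothetical, and a real audit would also cover intersectional categories and small-sample caveats.

```python
from collections import Counter


def impact_ratios(outcomes):
    """Compute each group's selection rate relative to the highest rate.

    outcomes is an iterable of (group, was_selected) pairs.
    Returns {group: ratio}, or {group: None} if nobody was selected.
    """
    applied = Counter()
    selected = Counter()
    for group, was_selected in outcomes:
        applied[group] += 1
        if was_selected:
            selected[group] += 1
    rates = {group: selected[group] / applied[group] for group in applied}
    top_rate = max(rates.values())
    if top_rate == 0:
        return {group: None for group in rates}
    return {group: rate / top_rate for group, rate in rates.items()}


def flag_four_fifths(ratios, threshold=0.8):
    """List groups whose impact ratio falls below the 80% threshold."""
    return sorted(
        group for group, ratio in ratios.items()
        if ratio is not None and ratio < threshold
    )


# Hypothetical screening results: 48 of 100 selected vs. 30 of 100 selected.
outcomes = (
    [("group_a", True)] * 48 + [("group_a", False)] * 52
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)
ratios = impact_ratios(outcomes)
flagged = flag_four_fifths(ratios)
```

In this made-up data, group_b's selection rate (30%) is 62.5% of group_a's (48%), so it falls below the 80% threshold and would warrant a closer look with the vendor.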

Protect Candidate Data

In addition to conducting bias audits, safeguarding candidate data is equally important. Conduct a Data Protection Impact Assessment (DPIA) early on to identify privacy risks. Limit the collection of personal information to essentials like name, skills, and qualifications, and avoid gathering data from social media or online photos without the candidate's consent. Recent reviews have shown the risks of inferring sensitive traits from minimal data inputs.
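One way to enforce this kind of data minimization is an allowlist applied before a candidate record ever reaches the AI tool. The sketch below is a simplified assumption of how that might look; the field names and the `minimize` helper are hypothetical, and the allowlist simply mirrors the essentials named above.

```python
# Fields treated as essential, mirroring the examples in the text.
ALLOWED_FIELDS = frozenset({"name", "skills", "qualifications"})


def minimize(record: dict, allowed=ALLOWED_FIELDS):
    """Split a candidate record into the fields to keep and the names dropped.

    Returns (kept, dropped): kept is a new dict with allowlisted fields only,
    dropped is a sorted list of the field names that were removed.
    """
    kept = {key: value for key, value in record.items() if key in allowed}
    dropped = sorted(key for key in record if key not in allowed)
    return kept, dropped


# Hypothetical raw record, including data that should not be passed along.
raw_record = {
    "name": "A. Candidate",
    "skills": ["SQL", "Python"],
    "qualifications": "BSc, 5 years of experience",
    "social_media_url": "https://example.com/profile",
    "profile_photo": "photo.jpg",
}
kept, dropped = minimize(raw_record)
```

Logging the dropped field names (not their values) gives a simple record that the minimization step ran, which can feed into the DPIA documentation.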

Set clear data retention policies and communicate them to candidates. Update privacy notices to inform candidates about the use of AI in the hiring process, explaining its purpose and potential impact. Offer candidates the option to opt out of AI-driven analytics, such as emotion detection in video interviews, and provide a manual review alternative upon request.

Combine Automation with Human Oversight

AI should never be the sole decision-maker in hiring. Train HR teams to interpret AI outputs, spot potential misuse, and challenge algorithmic predictions when needed. Recent and upcoming regulations, such as California's ADS Regulations (effective October 2025) and Colorado's AI Act (effective June 2026), require employers to exercise "reasonable care" by incorporating meaningful human review into AI-driven hiring processes.

"Effective AI governance is now a critical component of a robust corporate compliance program, requiring proactive measures and continuous adaptation to emerging risks." – Department of Justice (DOJ)

Before fully deploying AI tools, run pilot programs with diverse participants to evaluate how the tools perform in real-world scenarios compared to human benchmarks. For candidates with disabilities or those who speak English as a second language, ensure there is a manual review option when AI tools have known accuracy limitations. Establish clear procedures for candidates to contest automated decisions or provide additional context. Keep detailed records of risk assessments, mitigation efforts, and human oversight activities to strengthen your legal defense if issues arise. These steps not only enhance your hiring process but also align with oversight solutions available through platforms like scale.jobs.
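The oversight steps above could be wired into a screening workflow along the following lines. This is a hedged sketch, not a compliance mechanism: the `Screening` class, the `resolve` function, and the routing rule are all hypothetical, and the sketch assumes that only AI rejections and accommodation requests are routed to a person. A stricter policy could route every decision.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Screening:
    candidate_id: str
    ai_recommendation: str                   # "advance" or "reject"
    accommodation_requested: bool = False
    reviewer: Optional[str] = None           # who performed the manual review
    reviewer_decision: Optional[str] = None  # "advance" or "reject"


def resolve(screening: Screening, audit_log: list) -> str:
    """Decide the outcome and append a record of how it was reached."""
    needs_review = (
        screening.ai_recommendation == "reject"
        or screening.accommodation_requested
    )
    if not needs_review:
        outcome = screening.ai_recommendation
    elif screening.reviewer and screening.reviewer_decision:
        outcome = screening.reviewer_decision  # the human decision wins
    else:
        outcome = "pending_human_review"       # never auto-reject
    audit_log.append({
        "candidate_id": screening.candidate_id,
        "ai_recommendation": screening.ai_recommendation,
        "human_reviewed": needs_review and outcome != "pending_human_review",
        "reviewer": screening.reviewer,
        "outcome": outcome,
    })
    return outcome


# Hypothetical cases for illustration.
log = []
first = resolve(Screening("c-1", "advance"), log)
second = resolve(Screening("c-2", "reject"), log)
third = resolve(
    Screening("c-3", "reject", reviewer="hr-7", reviewer_decision="advance"), log
)
```

The audit log doubles as the record of human oversight activity: each entry shows what the tool recommended, whether a person reviewed it, and what the final outcome was.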

Conclusion

Why Employers Need to Address AI Risks Now

The clock is ticking for employers to ensure their hiring practices align with emerging legal standards. Starting June 30, 2026, the Colorado AI Act will require employers to exercise "reasonable care" to prevent algorithmic discrimination in hiring. Adding to the urgency, the Department of Justice has started factoring AI risk management into its evaluations of corporate compliance programs, influencing prosecutorial decisions.

The consequences for non-compliance are far-reaching. Recent class-action lawsuits and settlements with the EEOC highlight the liability faced by both employers and AI vendors when algorithms unlawfully exclude protected groups. Beyond legal repercussions, companies risk losing public trust and damaging their reputations. A stark example comes from the UK Information Commissioner's Office, which issued nearly 300 recommendations to AI developers in 2024 after uncovering tools that unfairly screened out candidates based on protected characteristics.

"There is no AI exemption to the laws on the books." – Joint Statement from EEOC, DOJ, CFPB, and FTC

With 73% of HR managers relying on AI for recruitment as of late 2025, the stakes are higher than ever. Employers must act quickly to implement hiring solutions that prioritize fairness and human oversight, avoiding the pitfalls of unchecked automation.

scale.jobs: A Human-Powered Alternative

In this evolving regulatory environment, scale.jobs emerges as a standout option for ethical hiring practices. Unlike fully automated platforms that risk algorithmic bias, scale.jobs prioritizes human oversight to meet the "meaningful human review" requirement outlined in new state regulations.

The platform offers a hands-on approach, with trained human virtual assistants managing every application. Features include ATS-optimized documents, real-time updates via WhatsApp, and straightforward, flat-fee pricing starting at $199 for 250 applications. By integrating human validation at every step, scale.jobs addresses both legal and ethical concerns, offering a solution that minimizes the risks associated with automated systems.

For job seekers worried about being unfairly excluded due to factors like age, ethnicity, or disability, scale.jobs provides a safer, more inclusive alternative. This human-powered service eliminates the "digital exclusion" issues increasingly flagged by regulators, ensuring that every candidate gets a fair shot in the hiring process.

FAQs

How can employers demonstrate their AI hiring tools are unbiased?

Employers can support a fairness claim by running regular bias audits that compare selection rates across gender, race, and intersectional categories, and by checking the results against the EEOC's four-fifths rule. Reviewing vendor documentation such as Model Cards, being transparent with candidates about how the tool is used, keeping records of audits and mitigation steps, and maintaining human oversight of final decisions further reinforce trust and help demonstrate compliance.

What candidate data can AI interview tools legally collect and keep?

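What AI interview tools may collect and keep depends on the jurisdiction. In general, they can collect and store candidate data such as video or audio recordings, personal information, and interview responses, provided the employer obtains the consent and gives the notices that applicable laws require. Collection should be limited to job-relevant information, and retention is bounded by law in some places: Illinois, for example, requires video and biometric data to be deleted within 30 days of a candidate's request.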

How can job seekers request a human review or ADA accommodations?

Job seekers should directly reach out to employers or recruiters to request a human review or accommodations under the Americans with Disabilities Act (ADA), especially when AI tools are part of the hiring process. According to ADA and Equal Employment Opportunity Commission (EEOC) guidelines, applicants are entitled to request reasonable accommodations to ensure a fair evaluation. These accommodations might include alternative formats, extended time for assessments, or a human-led review of their application. To streamline the process, it’s best to communicate these needs as early as possible, ideally during the application stage or when scheduling an interview.