AI in Hiring: Accessibility Challenges and Solutions

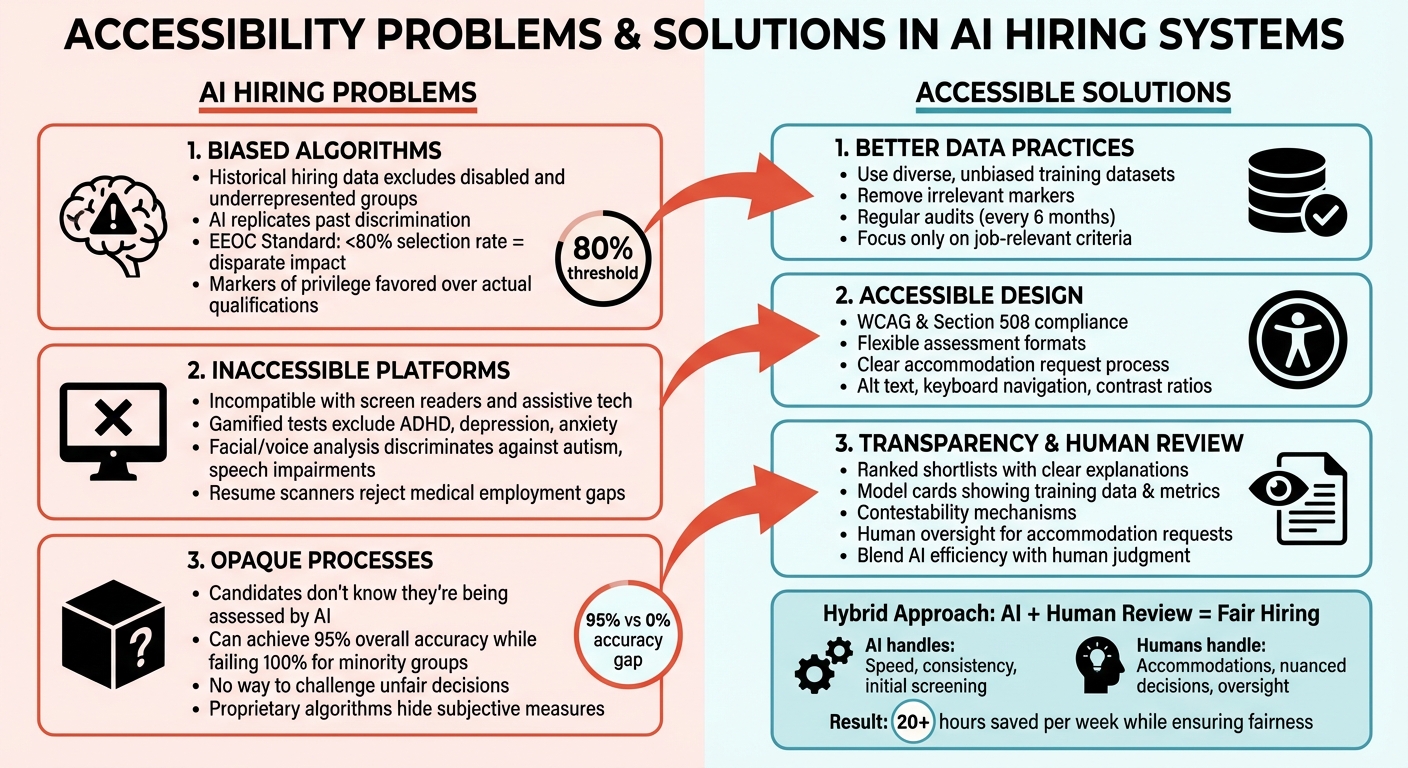

How AI hiring tools can exclude disabled and underrepresented candidates - fixes include diverse data, accessible platforms, transparent models, and human review.

AI is reshaping how companies hire, but it’s not without flaws. While AI brings speed and consistency to recruitment, it often creates barriers for disabled and underrepresented candidates. These issues stem from biased algorithms, inaccessible platforms, and opaque decision-making processes.

Key problems include:

- Biased training data: Historical hiring patterns often exclude marginalized groups, leading AI to replicate discrimination.

- Inaccessible platforms: Many systems don’t work with assistive technologies, disadvantaging disabled applicants.

- Lack of transparency: Candidates rarely know how decisions are made, making it hard to address unfair rejections.

Solutions exist. By using diverse datasets, designing accessible platforms, and ensuring transparency, companies can improve AI-driven hiring tools. Pairing AI with human oversight is critical to address gaps that automation alone cannot solve. For example, tools like scale.jobs combine AI efficiency with human review to help candidates navigate these challenges.

The future of hiring depends on addressing these accessibility gaps while leveraging AI’s potential to streamline processes.

3 Core Accessibility Problems in AI Hiring Systems and Their Solutions

Accessibility Problems in AI Hiring Systems

AI has made hiring more efficient, but it often creates barriers for disabled and underrepresented job seekers. These hurdles arise from three major issues: algorithms trained on biased historical data, platforms that fail to support assistive technologies, and opaque decision-making processes that leave candidates guessing. These problems highlight the need to rethink AI systems to ensure they work for everyone.

Biased Algorithms and Flawed Training Data

AI hiring tools are trained to recognize what a "good" employee looks like by analyzing past hiring decisions and existing staff performance. The issue? Historical hiring data often excludes people with disabilities and underrepresented groups, so these candidates are underrepresented - or entirely absent - in the datasets. As the U.S. Department of Justice points out:

"Because people with disabilities have historically been excluded from many jobs and may not be a part of the employer's current staff, this may result in discrimination."

This bias means algorithms tend to rank candidates who resemble these excluded groups lower, perpetuating the cycle of exclusion. AI can also pick up on markers of privilege that have little to do with actual job qualifications. For example, participation in niche sports may favor candidates from wealthier backgrounds, while tools that analyze facial expressions or voice patterns can unfairly penalize people with autism, speech impairments, or different communication styles.

Under the EEOC's Uniform Guidelines, a selection rate for a group that is less than 80% (four-fifths) of the rate for the group with the highest selection rate is generally regarded as evidence of disparate impact. This "four-fifths rule" is a rule of thumb rather than a definitive legal test, but fixing the biases behind such gaps is essential to building fairer hiring systems.
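As a rough illustration of the four-fifths screen, the Python sketch below computes each group's selection rate and its ratio to the highest rate. The group labels and counts are hypothetical, and the check covers only the 80% rule of thumb; it does not run the statistical significance tests that a full adverse-impact analysis often includes.

```python
from typing import Dict, Tuple


def impact_ratios(outcomes: Dict[str, Tuple[int, int]],
                  threshold: float = 0.8) -> Dict[str, Tuple[float, bool]]:
    """Apply the four-fifths rule of thumb.

    outcomes maps a group label to (number selected, number of applicants).
    Returns, per group, (ratio to the highest selection rate, ratio >= threshold).
    """
    # Selection rate = selected / applicants; groups with no applicants are skipped.
    rates = {g: sel / total for g, (sel, total) in outcomes.items() if total > 0}
    if not rates:
        raise ValueError("no groups with applicants")
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group had any selections; ratios are undefined")
    # Every group is compared with the group that has the highest selection rate.
    return {g: (r / best, r / best >= threshold) for g, r in rates.items()}


# Hypothetical counts: (selected, applicants)
result = impact_ratios({"group_a": (48, 80), "group_b": (12, 40)})
for group, (ratio, ok) in result.items():
    print(f"{group}: impact ratio {ratio:.2f} -> {'ok' if ok else 'flag for review'}")
```

Here group_b's selection rate (30%) is half of group_a's (60%), well under the 0.8 threshold, so it would be flagged for closer review.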

Application Platforms That Don't Work for Everyone

Many AI-powered application platforms are incompatible with the assistive technologies that disabled candidates rely on. Tools that don't work with screen readers, or that depend on facial analysis or spoken responses, can exclude blind, paralyzed, autistic, or deaf applicants, as well as those with manual or cognitive impairments.

Gamified personality tests add another layer of exclusion. These tests, designed to evaluate traits like "optimism" or "attention span", can filter out candidates with conditions such as ADHD, depression, or anxiety, even when these conditions have no impact on job performance. Automated resume scanners can also reject applicants with employment gaps, including gaps caused by medical treatment such as cancer therapy. The U.S. Department of Justice warns:

"If a county government uses facial and voice analysis technologies to evaluate applicants' skills and abilities, people with disabilities like autism or speech impairments may be screened out, even if they are qualified for the job."

A lack of transparency compounds the problem. Employers rarely disclose what technologies they use or how evaluations are conducted. This leaves applicants unsure if they need to request accommodations, a right protected under the Americans with Disabilities Act. Beyond technical barriers, this secrecy erodes trust in AI-driven hiring processes.

Opaque Evaluation Processes in AI Screening

AI screening often hides how candidates are evaluated. Many job seekers don’t know they’re being assessed by an algorithm, let alone what traits the system measures. This lack of visibility makes it very hard for candidates to spot potential bias or to know when to request accommodations. As researchers Manish Raghavan and Solon Barocas explain in an article for the Brookings Institution:

"Validation may report that a model performs very well overall while concealing that it performs very poorly for a minority population."

For instance, an AI model could achieve 95% overall accuracy while failing 100% of the time for a minority group that makes up just 5% of the dataset. This "differential validity" means the system might work well for most candidates but completely fail disabled applicants or other underrepresented groups - yet the aggregate data wouldn’t reveal the issue.
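The 95%-overall, 0%-for-the-minority scenario is easy to reproduce. This minimal Python sketch uses made-up records, each a (group, predicted label, actual label) tuple, to show how breaking accuracy down by group exposes what the aggregate number hides.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_group(records: Iterable[Tuple[str, int, int]]) -> Tuple[float, Dict[str, float]]:
    """Return (overall accuracy, accuracy per group) for (group, predicted, actual) records."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        hits[group] += int(predicted == actual)
    if not totals:
        raise ValueError("no records")
    overall = sum(hits.values()) / sum(totals.values())
    per_group = {g: hits[g] / totals[g] for g in totals}
    return overall, per_group


# Hypothetical data: 95 majority-group records all correct, 5 minority-group records all wrong.
records = [("majority", 1, 1)] * 95 + [("minority", 1, 0)] * 5
overall, per_group = accuracy_by_group(records)
print(overall)    # 0.95
print(per_group)  # {'majority': 1.0, 'minority': 0.0}
```

An audit that only looked at `overall` would report a strong model; the per-group figures show it never gets the minority group right.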

The lack of transparency also makes it impossible to challenge unfair decisions. Many proprietary algorithms rely on subjective measures of success, such as past performance reviews, which may already include human bias. The Center for Democracy & Technology cautions:

"As these algorithms have spread in adoption, so, too, has the risk of discrimination written invisibly into their codes."

Without knowing how decisions are made, rejected candidates can’t contest or correct the reasoning behind their rejection. This leaves qualified individuals shut out of opportunities they deserve. Addressing these barriers is a crucial step toward creating fair and accessible hiring practices powered by AI.

How AI Can Fix Accessibility in Hiring

AI has the potential to break down barriers in hiring. By retraining machine learning systems to identify diverse talent, redesigning platforms to support assistive technologies, and making algorithms more transparent, we can create fairer hiring processes. The challenge lies in moving away from systems that replicate past biases to ones that actively promote equity.

Better Data Practices to Reduce Bias

Diverse, representative datasets are key. AI learns from historical hiring data, so including examples of successful employees from diverse backgrounds helps it recognize talent in all its forms. Developers should also remove features unrelated to job skills, such as the niche-sports participation mentioned earlier, so the system has to rely on job-relevant predictors of success.
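One simple way to enforce this is an explicit allow-list of job-relevant features, applied before training or scoring. In the Python sketch below, the feature names and the allow-list are hypothetical; deciding what is actually job-related is a human and legal judgment, and dropping a column does not remove other features that act as proxies for it, which is one reason regular audits still matter.

```python
from typing import Dict, Iterable, Mapping

# Hypothetical allow-list: features a hiring team has judged to be job-related.
JOB_RELEVANT_FEATURES = frozenset({
    "skills_test_score",
    "years_relevant_experience",
    "relevant_certifications",
})


def job_relevant_only(candidate: Mapping[str, object],
                      allowed: Iterable[str] = JOB_RELEVANT_FEATURES) -> Dict[str, object]:
    """Keep only allow-listed features; anything else never reaches the model."""
    allowed_set = set(allowed)
    return {name: value for name, value in candidate.items() if name in allowed_set}


candidate = {
    "skills_test_score": 82,
    "years_relevant_experience": 4,
    "plays_lacrosse": True,        # privilege marker, not a job skill
    "employment_gap_months": 9,    # may reflect medical treatment
}
print(job_relevant_only(candidate))
# {'skills_test_score': 82, 'years_relevant_experience': 4}
```

An allow-list rather than a deny-list is the design choice here: any new field added upstream is excluded by default until someone argues it is job-related.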

Audits check fairness across all groups. Regular checks can reveal whether an AI system performs inconsistently for certain populations. For example, under the EEOC's Uniform Guidelines, a selection rate for one group that is less than 80% of the rate for the group with the highest selection rate is generally regarded as evidence of adverse impact. Even a system with high overall accuracy might completely fail smaller groups, and the root cause is that models imitate the decisions they were trained on, as Manish Raghavan and Solon Barocas from Cornell University note:

"Algorithms, by their nature, do not question the human decisions underlying a dataset. Instead, they faithfully attempt to reproduce past decisions, which can lead them to reflect the very sorts of human biases they are intended to replace."

Focus on job-relevant criteria. Under the ADA, hiring tools should measure the skills and abilities the job actually requires, not an applicant's impaired sensory, manual, or speaking skills (unless those are the skills the test is meant to measure). Likewise, subjective performance reviews, which often carry human bias, should be avoided as the measure of "success" a model learns to predict.

Beyond data, platforms must be designed to accommodate diverse user needs.

Job Platforms That Work for All Users

Accessibility standards like WCAG and Section 508 set the baseline. AI can help by generating alt text, and platforms should also provide sufficient color contrast for low-vision users and full keyboard navigation for those unable to use a mouse.
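Color contrast is one of the few accessibility checks that reduces to a formula. The Python sketch below implements the WCAG 2.x relative-luminance and contrast-ratio definitions and the Level AA thresholds from Success Criterion 1.4.3 (4.5:1 for normal text, 3:1 for large text). The function names are illustrative, and colors are assumed to be 6-digit sRGB hex strings.

```python
def _linearize(channel_8bit: int) -> float:
    """Convert an 8-bit sRGB channel to linear light, per the WCAG 2.x definition."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a '#RRGGBB' color (0 = black, 1 = white)."""
    h = hex_color.lstrip("#")
    if len(h) != 6:
        raise ValueError(f"expected a 6-digit hex color, got {hex_color!r}")
    r, g, b = (_linearize(int(h[i:i + 2], 16)) for i in (0, 2, 4))
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: str, background: str) -> float:
    """(L_lighter + 0.05) / (L_darker + 0.05); ranges from 1:1 to 21:1."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def meets_wcag_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """Level AA: at least 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


print(round(contrast_ratio("#767676", "#FFFFFF"), 2))  # 4.54
print(meets_wcag_aa("#777777", "#FFFFFF"))              # False (about 4.48:1)
```

A one-step-lighter gray fails AA for body text on white, which is why checks like this belong in automated tests rather than relying on a designer's eye.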

Assessment formats must be flexible. If a video interview or gamified test is inaccessible to someone with autism, paralysis, or a speech impairment, the platform should provide alternative ways to evaluate the same skills. As the U.S. Department of Justice emphasizes:

"Employers must ensure that any such tests or games measure only the relevant skills and abilities of an applicant, rather than reflecting the applicant's impaired sensory, manual, or speaking skills that the tests do not seek to measure."

Transparency about accommodations is essential. Candidates should have a clear, visible way to request reasonable accommodations without fear of penalty, and that option should be simple to use and available before they interact with any AI tool.

Once accessibility and bias are addressed, the next step is ensuring transparency in decision-making.

Clear Explanations of AI Decisions

Transparent AI builds trust. Instead of relying on mysterious "black-box" algorithms, hiring systems should provide ranked shortlists with clear explanations for each decision. Blind, rubric-based scoring ensures consistency and focuses evaluations on job-related competencies. As Sapia.ai, an AI hiring platform, warns:

"If hiring teams can't see why candidates are ranked the way they are, there will be decision-slowing disputes. Black-box algorithms might claim to be AI-powered, but they're a compliance nightmare."

Model cards document how a model was built and tested. These short, standardized summaries outline key details about an AI model, such as its training data, performance metrics, and potential risks. Employers should also disclose which technologies they use and what traits are being evaluated, so candidates can decide whether they need accommodations under the ADA.
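A model card can be as simple as a structured record published alongside the tool. The Python sketch below is loosely modeled on the model-card idea (intended use, training data, results broken down by group, limitations); the field names, the plain-text rendering, and all example values are illustrative placeholders, not a standard schema or real measurements.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ModelCard:
    """Illustrative model card for a hiring model; fields are assumptions, not a standard."""
    model_name: str
    intended_use: str
    training_data: str
    traits_evaluated: List[str]
    accuracy_by_group: Dict[str, float] = field(default_factory=dict)
    known_limitations: List[str] = field(default_factory=list)
    accommodation_contact: str = ""

    def to_text(self) -> str:
        """Render a plain-text summary that could be shown to applicants."""
        lines = [
            f"Model: {self.model_name}",
            f"Intended use: {self.intended_use}",
            f"Training data: {self.training_data}",
            "Traits evaluated: " + ", ".join(self.traits_evaluated),
        ]
        # Report results per group, not just one overall figure.
        for group, acc in sorted(self.accuracy_by_group.items()):
            lines.append(f"Accuracy ({group}): {acc:.0%}")
        lines += [f"Known limitation: {item}" for item in self.known_limitations]
        if self.accommodation_contact:
            lines.append(f"Request an accommodation: {self.accommodation_contact}")
        return "\n".join(lines)


# All values below are placeholders for illustration.
card = ModelCard(
    model_name="resume-screener-v2",
    intended_use="Rank applications for recruiter review; not for automatic rejection",
    training_data="Description of the applications the model learned from",
    traits_evaluated=["required certifications", "relevant experience"],
    accuracy_by_group={"overall": 0.91, "disclosed disability": 0.78},
    known_limitations=["Not validated on non-traditional career paths"],
    accommodation_contact="accommodations@example.com",
)
print(card.to_text())
```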

Contestability mechanisms are vital for fairness. Every system should include a way for candidates to challenge AI-driven decisions. Without this, individuals have no recourse when algorithms fail them. Human review processes ensure that applicants can question and address unfair outcomes.

Tests That Accommodate Different Needs

Real-time adjustments make assessments fairer. Platforms can tailor test parameters based on candidate needs. For example, someone with ADHD might require extra time, while a candidate with a visual impairment might need a screen-reader-compatible format.
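Put simply, the delivery of an assessment can change while what it scores stays the same. The Python sketch below is a hypothetical example: the `AssessmentConfig` fields, the format names, and the 1.5x extra-time figure are assumptions for illustration, and real adjustments should follow the candidate's own accommodation request rather than the system guessing what they need.

```python
import math
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class AssessmentConfig:
    """Hypothetical assessment settings. Only delivery changes; scored skills do not."""
    skills_measured: tuple
    time_limit_minutes: int
    delivery_format: str  # e.g. "video", "text", "screen_reader_html"


def apply_accommodations(base: AssessmentConfig,
                         extra_time_factor: float = 1.0,
                         screen_reader: bool = False,
                         avoid_video: bool = False) -> AssessmentConfig:
    """Return a new config adjusted for an approved accommodation request."""
    cfg = base
    if extra_time_factor > 1.0:
        # Round up so the extended limit is never shorter than requested.
        cfg = replace(cfg, time_limit_minutes=math.ceil(base.time_limit_minutes * extra_time_factor))
    if screen_reader:
        cfg = replace(cfg, delivery_format="screen_reader_html")
    elif avoid_video and cfg.delivery_format == "video":
        cfg = replace(cfg, delivery_format="text")
    return cfg


base = AssessmentConfig(("customer problem solving",), 30, "video")
adjusted = apply_accommodations(base, extra_time_factor=1.5, avoid_video=True)
print(adjusted)
```

Because `skills_measured` is carried over untouched, the adjusted test still measures the same job skills, which is the point of the DOJ guidance on accessible testing.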

Avoiding discriminatory data ensures fairness. AI should ignore irrelevant factors, like employment gaps caused by medical treatment, which have no bearing on job performance. As the Center for Democracy & Technology points out:

"If an employer uses selection criteria that screen out disabled candidates, the criteria must be 'job-related' and 'consistent with business necessity.'"

Human oversight strengthens AI decisions. While AI can rank or filter candidates efficiently, human reviewers should engage meaningfully with the system's outputs and question recommendations that conflict with fairness goals. This combines automation's speed with human judgment and accountability.

Combining AI with Human Review

Achieving fair and accessible hiring systems requires blending AI with human judgment. Even the most advanced algorithms can overlook critical nuances, such as a candidate’s speech impairment or a medically justified gap in employment. By pairing automation with human oversight, companies can create a safety net that addresses these gaps and ensures a more equitable hiring process.

When Human Review Matters Most

Accommodation requests demand human intervention for quick and thoughtful solutions. AI systems aren’t equipped to handle requests like alternative testing formats or accessible interview tools. For instance, if a candidate with autism asks to take a text-based assessment instead of participating in a video interview that analyzes facial expressions, a human recruiter must step in to make that adjustment. The U.S. Department of Justice underscores this point:

"If a test or technology eliminates someone because of disability when that person can actually do the job, an employer must instead use an accessible test that measures the applicant's job skills, not their disability, or make other adjustments to the hiring process."

Human review also addresses unfair screening and corrects algorithmic blind spots. For example, AI transcription tools might boast 97% accuracy for native English speakers but struggle significantly when processing the speech of non-native speakers or those with strong accents. Similarly, systems that analyze vocal cues may unfairly disqualify candidates with speech impairments. By offering a manual review option, companies can ensure that candidates who disclose disabilities are evaluated by someone who understands their unique context.
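A manual review option can be built into the pipeline as explicit routing rules. In this Python sketch, the fields, the thresholds (a 0.85 transcription-confidence floor, a 0.1 margin around the pass mark), and the rule set are all hypothetical; the idea is that the system records why a person should look at a case instead of silently deciding it.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ScreeningResult:
    """Hypothetical output of an automated screen for one candidate."""
    candidate_id: str
    score: float                     # model score in [0, 1]
    transcription_confidence: float  # speech-to-text confidence in [0, 1]; 1.0 if nothing was spoken
    disclosed_disability: bool = False
    accommodation_requested: bool = False


def review_reasons(r: ScreeningResult, pass_mark: float = 0.5,
                   margin: float = 0.1, min_transcription_conf: float = 0.85) -> List[str]:
    """List the reasons (if any) this result should go to a human reviewer."""
    reasons = []
    if r.disclosed_disability:
        reasons.append("candidate disclosed a disability")
    if r.accommodation_requested:
        reasons.append("accommodation requested")
    if r.transcription_confidence < min_transcription_conf:
        reasons.append("low transcription confidence (accent or speech difference?)")
    if abs(r.score - pass_mark) < margin:
        reasons.append("score close to the pass mark")
    return reasons


def route(r: ScreeningResult) -> str:
    """Send any flagged result to a person; otherwise let the automated step proceed."""
    return "human_review" if review_reasons(r) else "automated"


for result in [
    ScreeningResult("c1", score=0.90, transcription_confidence=0.97),
    ScreeningResult("c2", score=0.20, transcription_confidence=0.60),
    ScreeningResult("c3", score=0.75, transcription_confidence=0.95, disclosed_disability=True),
]:
    print(result.candidate_id, route(result), review_reasons(result))
```

A stricter setup could send every would-be rejection to a person, which also gives candidates a natural route for contesting decisions.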

Under the EEOC’s Uniform Guidelines, a selection rate for one group that is less than 80% of the rate for the group with the highest selection rate is generally regarded as evidence of adverse impact. An AI system might claim 95% overall accuracy but perform no better than random chance for a minority population. Regular audits, for example every six months, can help identify these disparities, but it takes human expertise to interpret the findings and decide when to override the system. As the UK Department for Science, Innovation & Technology points out:

"Employees must be able to meaningfully engage with the outputs of a system to feel confident in acting on the AI-enabled prediction, decision or recommendation."

This synergy between automation and human insight naturally leads to platforms that blend both approaches, like scale.jobs.

scale.jobs: AI Tools Plus Human Support

Recognizing the importance of human oversight, scale.jobs combines AI-driven tools with human expertise to tackle these challenges. Many AI-powered application systems unintentionally filter out qualified candidates before their resumes even reach a recruiter. Scale.jobs addresses this issue by offering a mix of free software, AI customization, and human-assisted services designed to help candidates navigate these barriers.

Human assistants fill the gaps left by AI screening. Starting at $199 for 250 applications, trained professionals handle tasks like completing applications accurately, optimizing resumes for applicant tracking systems (ATS), crafting tailored cover letters, and submitting applications on time across various platforms. Features like real-time WhatsApp updates, time-stamped proof-of-work screenshots, and refunds for unused credits support transparency. This flat-fee model avoids recurring subscriptions and can free up over 20 hours a week for candidates to focus on networking and interview preparation.

The service is particularly valuable for candidates facing visa-related challenges. For individuals navigating complex visa situations, such as H-1B, F-1 (including CPT), TN, O-1, or EB-1A cases, or pursuing Canada PR or a UK Global Talent Visa, a human assistant can make sure applications state visa status correctly and avoid common errors that trigger automatic rejections. It’s also a game-changer for recent graduates, laid-off workers, and frequent job changers, as experts can help frame employment gaps or frequent moves in a way that appeals to recruiters.

Conclusion: Building Fair Hiring Systems with AI

Creating fair AI-driven hiring systems requires a mix of transparency, regular checks, and human oversight. Employers should clearly inform applicants about the specific AI tools in use and the criteria being applied to evaluate them. Routine audits play a key role in identifying disparities and reducing legal risks.

Accessible alternatives are essential for ADA compliance. Employers need to ensure that their software works with screen readers, provide non-automated interview options when requested, and establish clear, non-punitive processes for handling accommodation requests.

Hybrid screening, which blends AI with human judgment, offers a balanced answer to the limitations of automation. This approach is especially important when assessing candidates with speech impairments, autism, or other conditions that AI might misinterpret or penalize unfairly. The human element brings nuanced understanding that algorithms can't replicate.

A hybrid model combining AI precision with human insight stands out. For instance, Scale.jobs exemplifies this approach by offering free AI tools - such as ATS-friendly resume builders and single-click tailored resumes - alongside human assistants who manually complete applications to avoid bot-related issues. This method ensures that complex needs, like visa status disclosures or accommodation requests, are handled thoughtfully, going beyond what algorithms can achieve. It highlights the importance of balancing technical efficiency with human expertise.

Inclusive hiring systems must prioritize accessibility at their core. Employers are legally accountable for discriminatory practices caused by AI tools, even if those tools come from third-party vendors. This makes it essential to scrutinize hiring technologies both before and during their use. By focusing on diverse datasets, multi-faceted assessments, and transparent decision-making, companies can build recruitment processes that identify top talent without perpetuating past biases. The future of inclusive hiring lies in merging advanced AI capabilities with the irreplaceable judgment of human professionals.

FAQs

How can companies make AI hiring tools accessible to candidates with disabilities?

To make sure AI hiring tools are inclusive, companies need to follow the Americans with Disabilities Act (ADA) and EEOC guidelines, which emphasize equal access and reasonable accommodations for candidates with disabilities. This means AI systems should not create obstacles like inaccessible video platforms, unlabeled images, or interfaces that rely solely on text.

Here’s how businesses can take practical steps:

- Design tools that align with accessibility standards like WCAG 2.1 AA.

- Offer alternative formats and adjustments, such as captions for videos or extra time for assessments.

- Make it easy for candidates to request accommodations during the hiring process.

Additionally, companies should test AI models for potential bias by using diverse datasets, require vendors to confirm their tools meet ADA compliance, and train hiring teams on inclusive practices. By addressing accessibility proactively, businesses can ensure fairer hiring processes while also reaching a wider pool of talented candidates.

How can companies reduce bias in AI-powered hiring processes?

Reducing bias in AI-powered hiring begins with a thorough review of both the data and algorithms behind recruitment tools. Companies need to analyze their historical hiring data to spot and address any patterns of bias, particularly those affecting groups protected on the basis of race, gender, or disability. Using training datasets that reflect a wide range of perspectives and identities is critical, along with consistent monitoring to catch and correct hidden biases.

Equally important is integrating human oversight and ensuring accessibility throughout the process. Recruiters should have the ability to evaluate and question AI-generated recommendations to ensure hiring decisions align with fairness and equity goals. Recruitment tools should also meet accessibility standards by providing accommodations for candidates with disabilities. By blending transparent practices, diverse datasets, and active human involvement, businesses can work toward a hiring process that is both fair and inclusive.

Why is it important to have human oversight in AI-driven hiring?

Human involvement plays a crucial role in AI-driven hiring to ensure fairness, inclusivity, and adherence to legal standards. While AI systems excel at quickly screening and ranking candidates, they can unintentionally reinforce biases or fail to address the needs of individuals with disabilities. Human reviewers step in to catch and address these issues, using their judgment to prevent discrimination and ensure accessibility.

Blending AI with human oversight also improves the quality and clarity of the hiring process. Hybrid platforms such as Scale.jobs combine AI tools with manual review to produce accurate, ATS-compliant resumes, correct application submissions, and a smoother experience for candidates. This mix of automation and human insight builds trust and ensures a more balanced, effective, and fair hiring process for everyone involved.